Content Moderation Meaning: What Is It & How Does It Work?

Jan 10, 2026

As the digital world evolves, online platforms are opening their doors for users to speak their minds without filters.

But not all expressions are positive. Some can be quite harmful and unnecessarily rude.

That’s why moderating user-generated content is a must in this digital era. It helps keep the online space safe.

So, content moderators review, monitor, and manage all kinds of content on diverse online platforms, ensuring the community feels safe and positive.

But the real question is: “How does content moderation work?”

Well, look no further. In this blog, I cover what content moderation means, how the process works, its types, its pros and cons, and more.

Stay tuned!

First, the meaning of content moderation is not complex. It simply refers to reviewing and managing user-generated content.

It ensures that content adheres to the community guidelines, legal requirements, and policies of social media platforms.

Generally, content moderation is done to prevent the spread of harmful or inappropriate material, so that the digital space stays safe for the community.

Content moderation can be done in two ways: manually or with AI tools.

That is, human moderators can manually review and monitor the content. Alternatively, AI automation can be used to speed up the review process.

In addition, both methods can be combined into a hybrid model, where content is reviewed by AI tools under human supervision.
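To make the hybrid model a bit more concrete, here is a minimal Python sketch of how a platform might route content based on an AI model's score. Everything in it is an assumption for illustration: the `route` function, the two threshold values, and the idea that the model returns a harm score between 0 and 1 are not taken from any specific tool.

```python
# Hypothetical thresholds; a real platform would tune these on its own data.
HIDE_AT = 0.95          # very likely harmful: hide now, confirm with a human
HUMAN_REVIEW_AT = 0.50  # uncertain: let a human moderator decide


def route(score: float) -> str:
    """Map a model's harm score (0.0 to 1.0) to a moderation route."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0.0 and 1.0")
    if score >= HIDE_AT:
        return "hide_then_review"
    if score >= HUMAN_REVIEW_AT:
        return "human_review"
    return "publish"
```

In this sketch, automation handles the clear cases quickly, while anything uncertain or severe still ends up in front of a human moderator.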

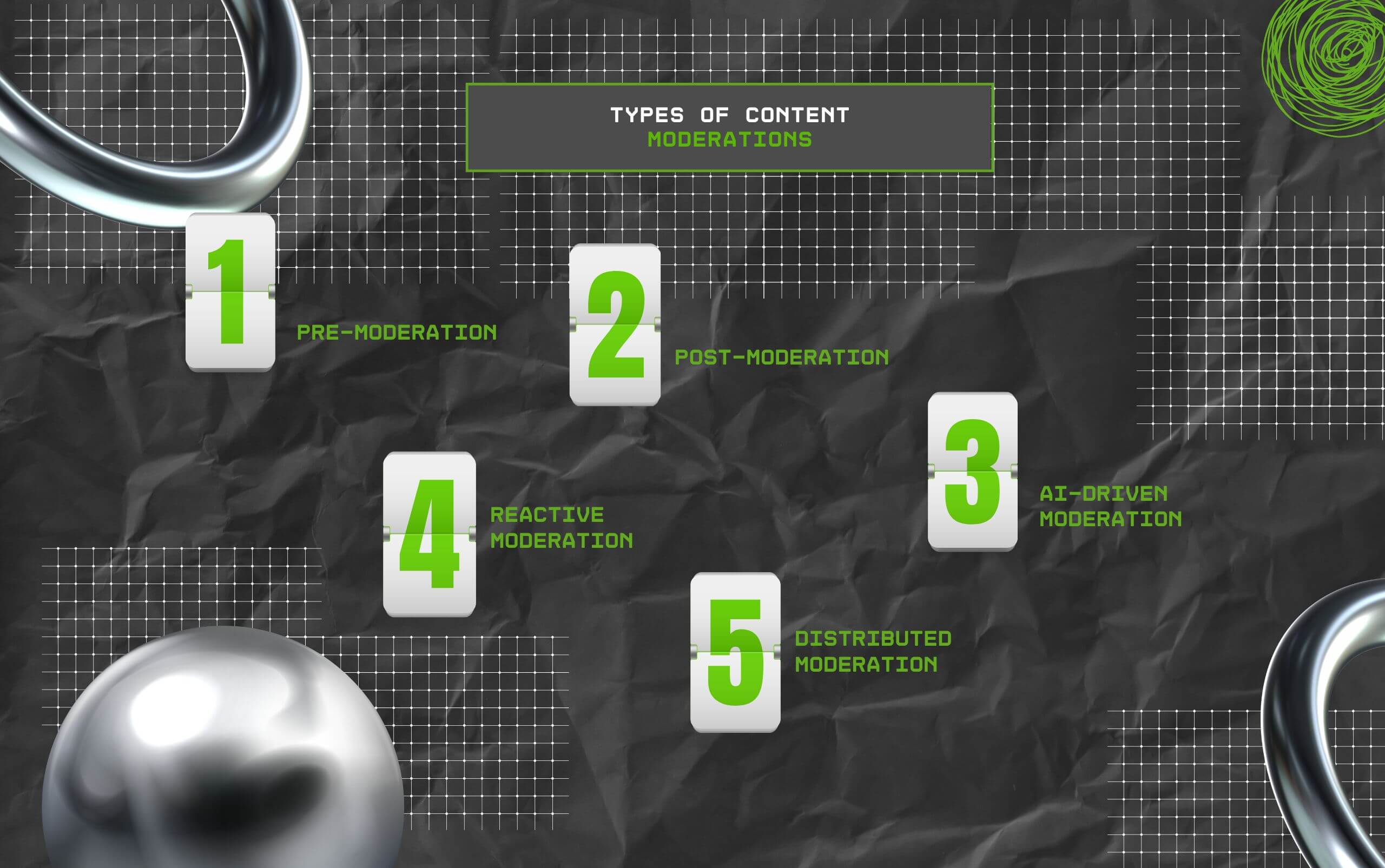

Because platforms differ in size, risk level, and the nature of user interaction, no single type of content moderation fits all.

So, here are some of the common content moderation types—

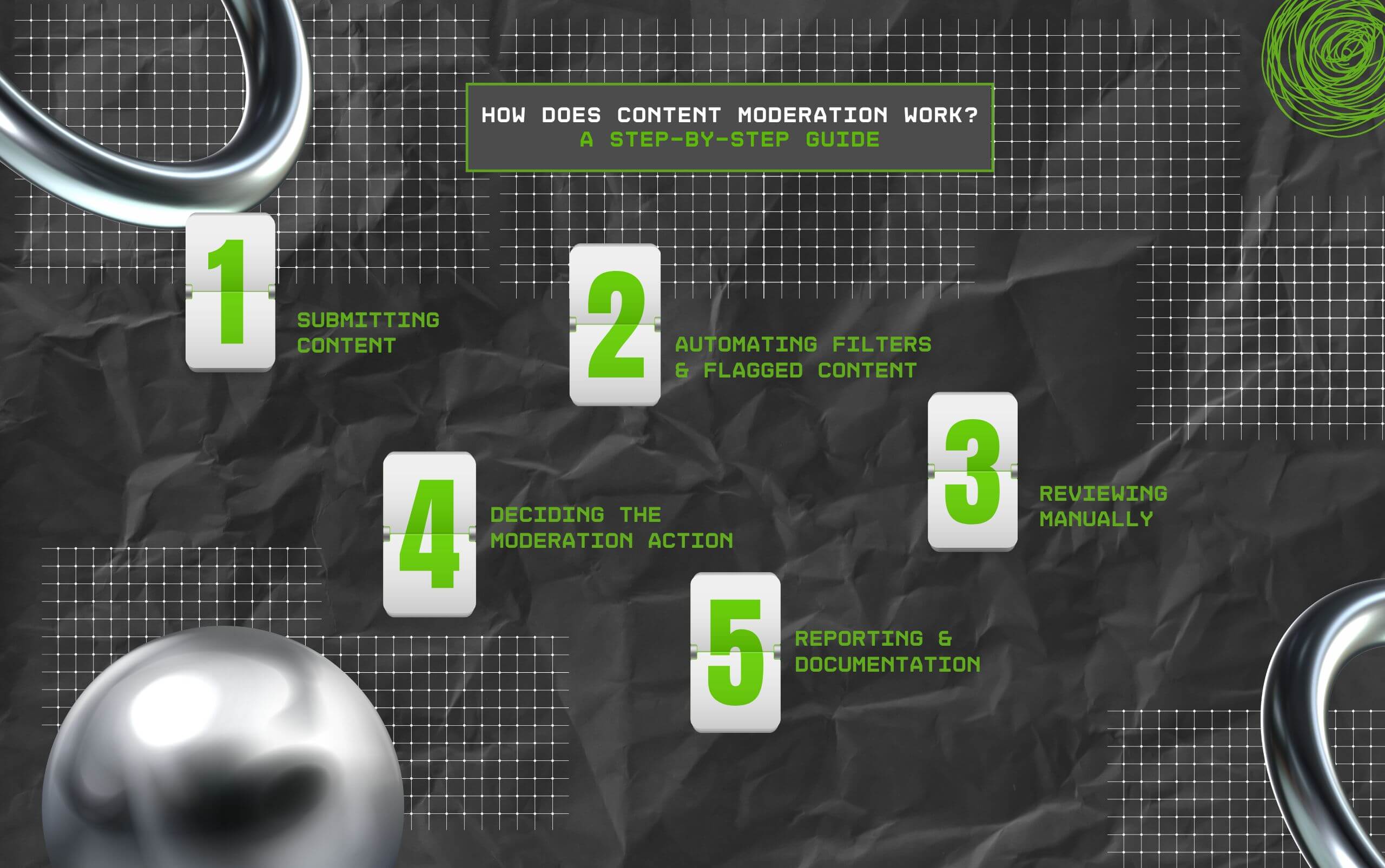

Usually, content moderation follows a structured workflow. Here are the steps to review and manage user-generated content on online platforms:

First, the content is submitted to the online platform. That is, moderation starts once posts, images, comments, or even videos are submitted or published.

Then, AI tools can automatically flag and filter content that contains certain keywords, images, or other non-compliant elements.

After that, human moderators can review the flagged content to confirm that the AI models detected the issues accurately. This way, content that was flagged by mistake can be caught and corrected.

Once a flag is confirmed, the moderator decides on the action to take: whether the content will be removed, investigated further, or edited.

Finally, after the decision is made, the user should be notified of the outcome, such as a content removal. The moderation actions should then be documented for future compliance reference.
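The steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular platform does it: the `Submission` class, the `BLOCKED_KEYWORDS` list, and the `human_review` and `notify` callbacks are hypothetical names, and a plain keyword match stands in for real AI filtering.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Hypothetical blocklist. A plain keyword match stands in for real AI filtering.
BLOCKED_KEYWORDS = {"spamlink", "scamoffer"}


@dataclass
class Submission:
    user: str
    text: str
    flagged_terms: List[str] = field(default_factory=list)
    decision: Optional[str] = None


def auto_filter(item: Submission) -> bool:
    """Automated filtering: flag the item if it contains a blocked keyword."""
    words = {w.strip(".,!?").lower() for w in item.text.split()}
    item.flagged_terms = sorted(words & BLOCKED_KEYWORDS)
    return bool(item.flagged_terms)


def moderate(
    item: Submission,
    human_review: Callable[[Submission], str],
    notify: Callable[[str, str], None],
    audit_log: List[Dict],
) -> str:
    """Run one submitted item through filtering, review, decision, and logging."""
    if auto_filter(item):
        # Manual review: a person confirms or overturns the automated flag and
        # returns "remove", "investigate", "edit", or "approve" (false positive).
        item.decision = human_review(item)
        if item.decision != "approve":
            notify(item.user, item.decision)  # tell the user what happened
    else:
        item.decision = "approve"
    # Document every decision for future compliance reference.
    audit_log.append(
        {"user": item.user, "flags": item.flagged_terms, "decision": item.decision}
    )
    return item.decision
```

For example, passing a post that contains "scamoffer" together with a reviewer callback that returns `"remove"` would notify the user and add one entry to the audit log, while a clean post would be approved without any human review.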

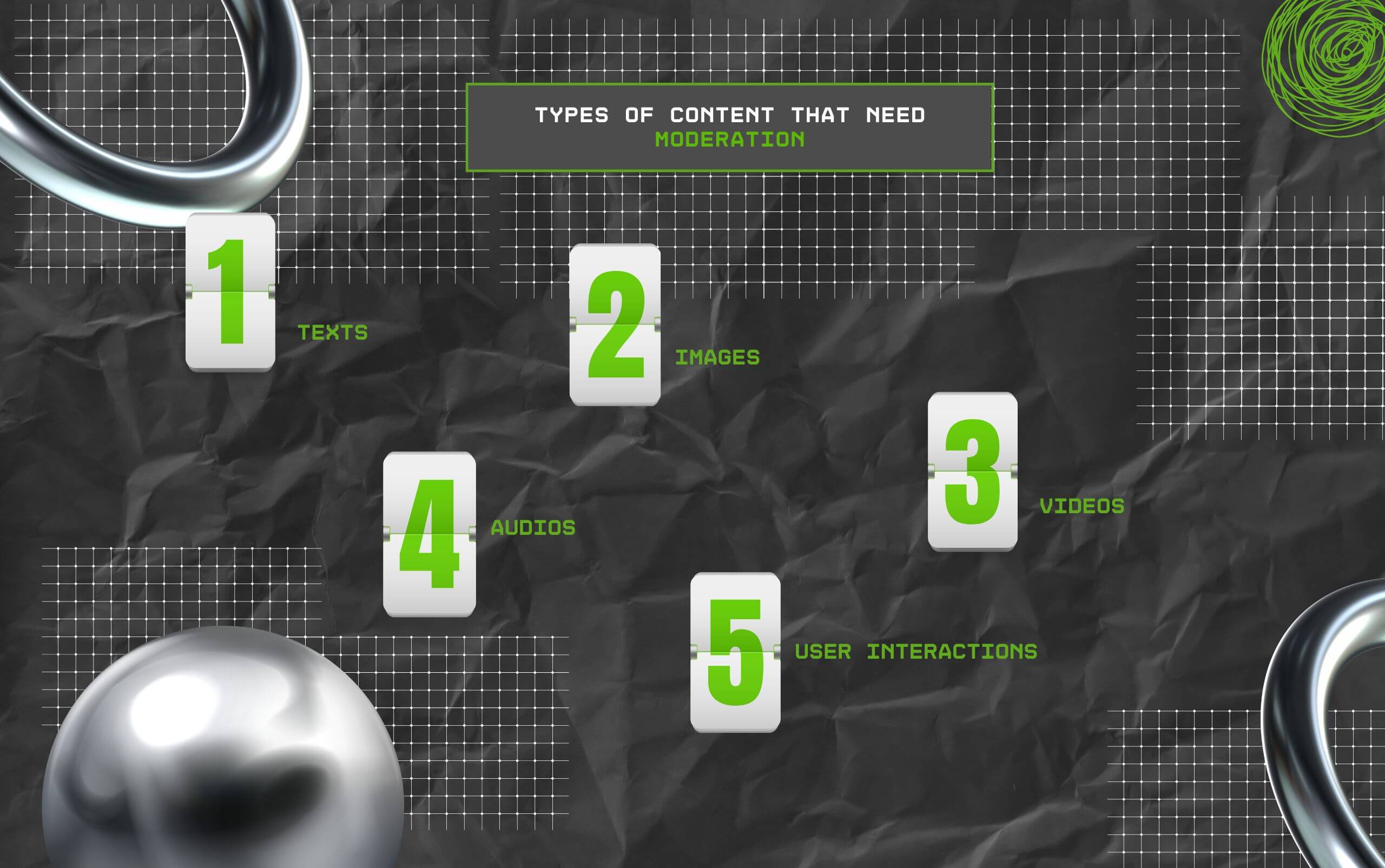

It is no secret that social media platforms host diverse kinds of content. So, moderation is needed for these types of content:

Although content moderation offers massive benefits, it comes with some challenges and limitations. So, here is a breakdown of the pros and cons of content moderation—

| Pros | Cons |
|---|---|
| 1. Allows users to experience a safe and positive online space for community discussions. | 1. Difficulty in managing high volumes of daily user-generated content. |
| 2. Ensures the content adheres to the legal and regulatory community policies. | 2. Lack of contextual and cultural sensitivity. |
| 3. Maintains the integrity and reputation of the online platform. | 3. Limitations of AI models in accurately identifying the issues with content. |
| 4. Ensures the communities are inclusive and culturally aware. | 4. Difficulty in balancing free expression and user safety on the platforms. |

Unlike manual content moderation, AI models completely depend on their machine learning algorithms and training data to detect issues with the content.

So, it is necessary to train these automated models on large, diverse datasets. This way, moderators can reduce the risk of biased results.
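One rough way to check for biased results is to compare how often the model wrongly flags acceptable content across different groups of posts, for example by language. The sketch below assumes you have a sample of posts that human moderators have already labeled. The function name, the record layout, and the toy records are made up for illustration.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Return, per group, the share of acceptable posts the model wrongly flagged.

    Each record is a tuple: (group, model_flagged, human_says_violation).
    """
    wrongly_flagged = defaultdict(int)
    acceptable = defaultdict(int)
    for group, model_flagged, is_violation in records:
        if not is_violation:  # only posts a human judged acceptable count here
            acceptable[group] += 1
            if model_flagged:
                wrongly_flagged[group] += 1
    return {g: wrongly_flagged[g] / acceptable[g] for g in acceptable}


# Made-up toy records, not real data.
sample = [
    ("en", True, False), ("en", False, False),
    ("en", False, False), ("en", False, False),
    ("es", True, False), ("es", True, False),
    ("es", False, False), ("es", False, False),
    ("es", True, True),  # a real violation, so it is not a false positive
]
print(false_positive_rates(sample))  # {'en': 0.25, 'es': 0.5}
```

A large gap between groups, like the one in this toy sample, would be a hint that the training data under-represents some kinds of content and needs a closer look.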

Moreover, these AI tools can use natural language processing (NLP) to detect harmful or non-compliant content quickly.

So, detecting spam, misinformation, and hate speech across countless social media posts becomes faster and more efficient. Some of its benefits and challenges include:

Knowing all about content moderation is not enough. So, here are some tips to ensure you adopt the content moderation process the right way:

So, it is pretty clear that a hybrid content moderation process can help create a safe and positive digital space where all users can interact with the community.

In this part, I answer the questions about content moderation and the role of a moderator that users ask most often.

A content moderator's role revolves around reviewing all kinds of content for diverse online platforms.

That is, they review text, images, and videos from users. This way, they can ensure that posts adhere to the guidelines and policies of the social media platforms.

Moreover, they identify harmful content, misinformation, and hate speech and remove them from the community, so that the digital space is safe for all kinds of users.

Given that content moderators need to analyze diverse kinds of content on online platforms, they require some of these skills:

• Attention to detail to identify content issues.

• Emotional resilience to handle disturbing and sensitive content.

• Sound judgment to make consistent and fair decisions about content.

• Clear communication and collaboration skills to report content issues.

• Multi-cultural sensitivity to understand diverse cultural contexts.

• Adaptability to the changing market and the trends of social media.

No, AI content moderation is not fully reliable on its own. That is, a manual review of its decisions is a must.

So, brands and businesses can adopt the hybrid model for AI content moderation. This way, they can combine both automation and human oversight within a single system.

Generally, industries that rely heavily on user-generated content adopt content moderation methods and moderators.

These include social media platforms, e-commerce, digital media, online gaming, and other businesses built on user-submitted content. Moderators help them keep the digital space legally compliant.

Given that most online platforms value free speech, content moderation has to work within some constraints.

So, here are some ways to balance content moderation and free speech on online platforms:

• Employing a hybrid model by combining human insight and AI automation.

• Clarifying guidelines and policies on community maintenance.

• Keeping up with evolving forms of hate speech and harmful content, and with cultural contexts.

• Prioritizing user safety while allowing open discussions.

Chandrima is a seasoned digital marketing professional who works with multiple brands and agencies to create compelling web content for boosting digital presence. With 3 years of experience in SEO, content marketing, and ROI-driven content, she brings effective strategies to life. Outside blogging, you can find her scrolling Instagram, obsessing over Google's algorithm changes, and keeping up with current content trends.
