Facebook Premiere: Why Digital Marketers Need It In 2026?

Jan 22, 2026

Jan 22, 2026

Jan 21, 2026

Jan 21, 2026

Jan 20, 2026

Jan 20, 2026

Jan 19, 2026

Jan 19, 2026

Jan 19, 2026

Sorry, but nothing matched your search "". Please try again with some different keywords.

Website crawling is how a search engine, like Google, uses automatic bots known as crawlers or spiders to crawl and index web pages.

It simply means crawlers scan the internet by hopping from one page to another via links, gathering data on every page’s content, structure, and relevance.

The data gathered is then indexed in the search engine; thus, when a user types a set of keywords, the site will be included in the results list.

To explain in simple terms, website crawling refers to one of the most crucial processes in SEO.

That is, it is the complete process of the search engine bots to discover new content on diverse websites and index them.

Now, this crawling does not only include textual content. That is, search engine bots crawl all kinds of content media—

So, when there is a search on anything related to these indexed or crawled web content, the search engines can pull up the content from the repository and rank it on the SERPs.

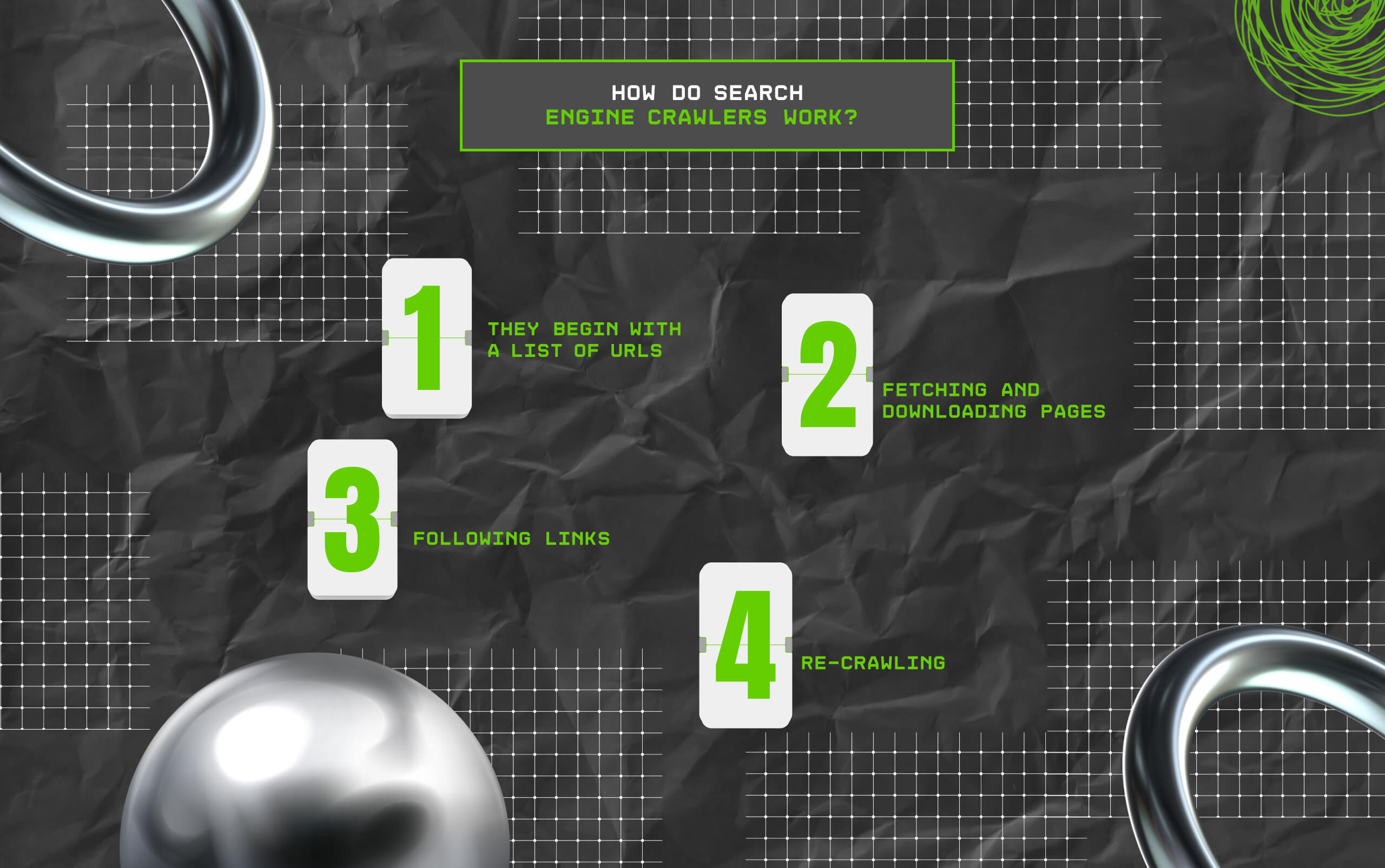

Crawlers function by following a systematic process of discovery. Here’s how it goes about things:

Crawlers are handed a list of known web pages; this list may have emerged from previous crawls or through submitted sitemaps.

They make an HTTP request to the server hosting some webpage so that they can download the content of the page.

The crawler searches for links to other pages within the page and follows them to get more content.

In the search engine, they also crawl and index all the content from all the pages, text, images, metadata, etc.

A website is revisited periodically for new information or changes made on the pages that a search engine might index.

Website crawling is vital in SEO because the search engine can understand and rank your site through it.

If crawlers were not there, search engines could not index your content, making it invisible in search results. Here’s why crawling matters:

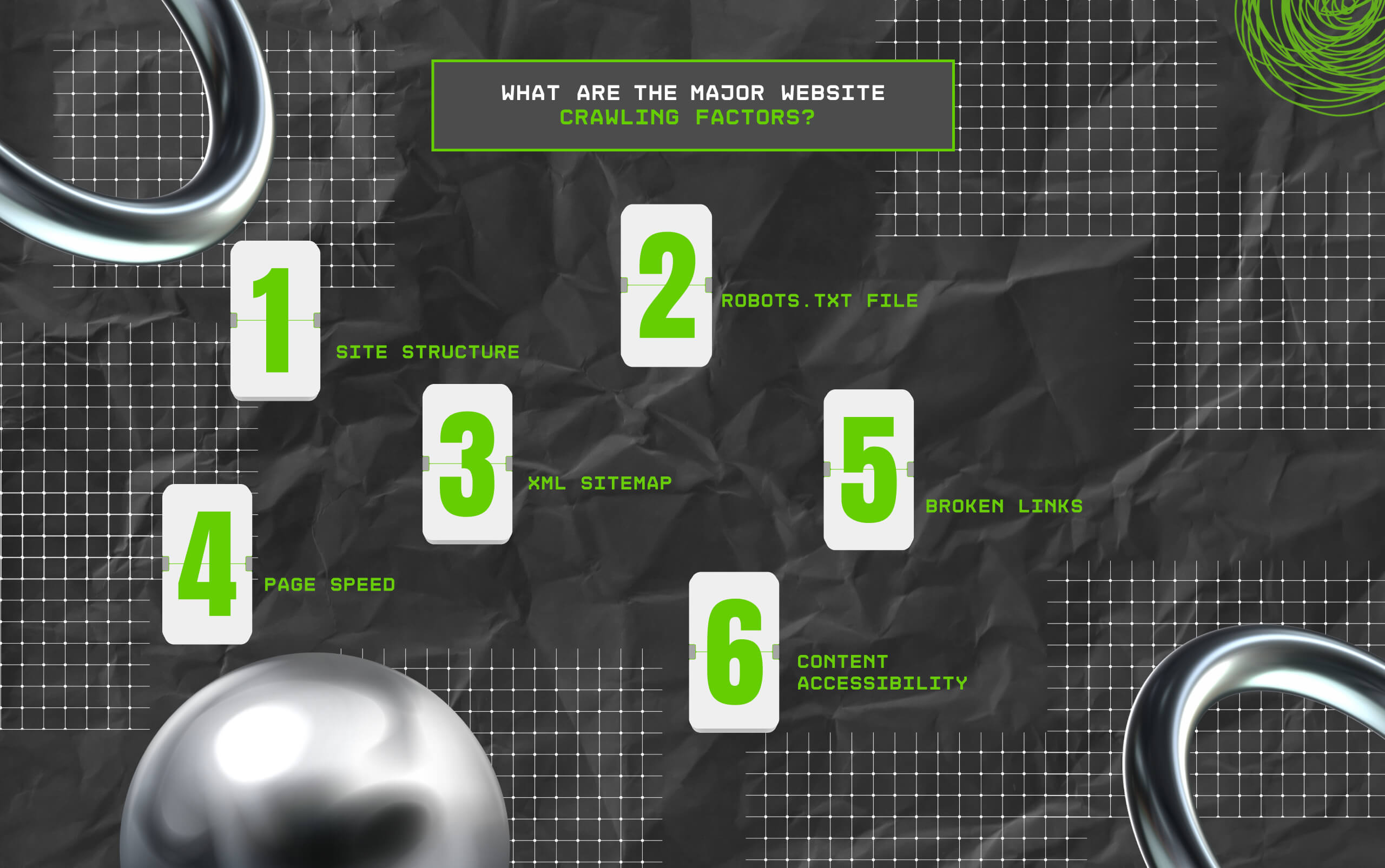

Here’s a list of factors associated with how a spider will be able to gain entry to crawl into and upon your site:

Where your website is well structured, the crawlers will be able to know which part to crawl and find out its contents.

This can be used as instructions by crawlers on which pages or parts of your site are acceptable or not allowed for crawling. Wrong settings might leave your crucial pages without indexes.

An updated XML sitemap helps the crawlers find and prioritize your most important pages.

Slow sites may hinder crawlers from effectively indexing your site because they can only access some of the content over a reasonable period.

Broken link pages make crawling inefficient because these crawlers are likely stuck and unable to access subsequent pages.

When your content is embedded behind JavaScript, Flash, or other non-crawler-friendly technologies, the search engine can have problems reading and indexing it.

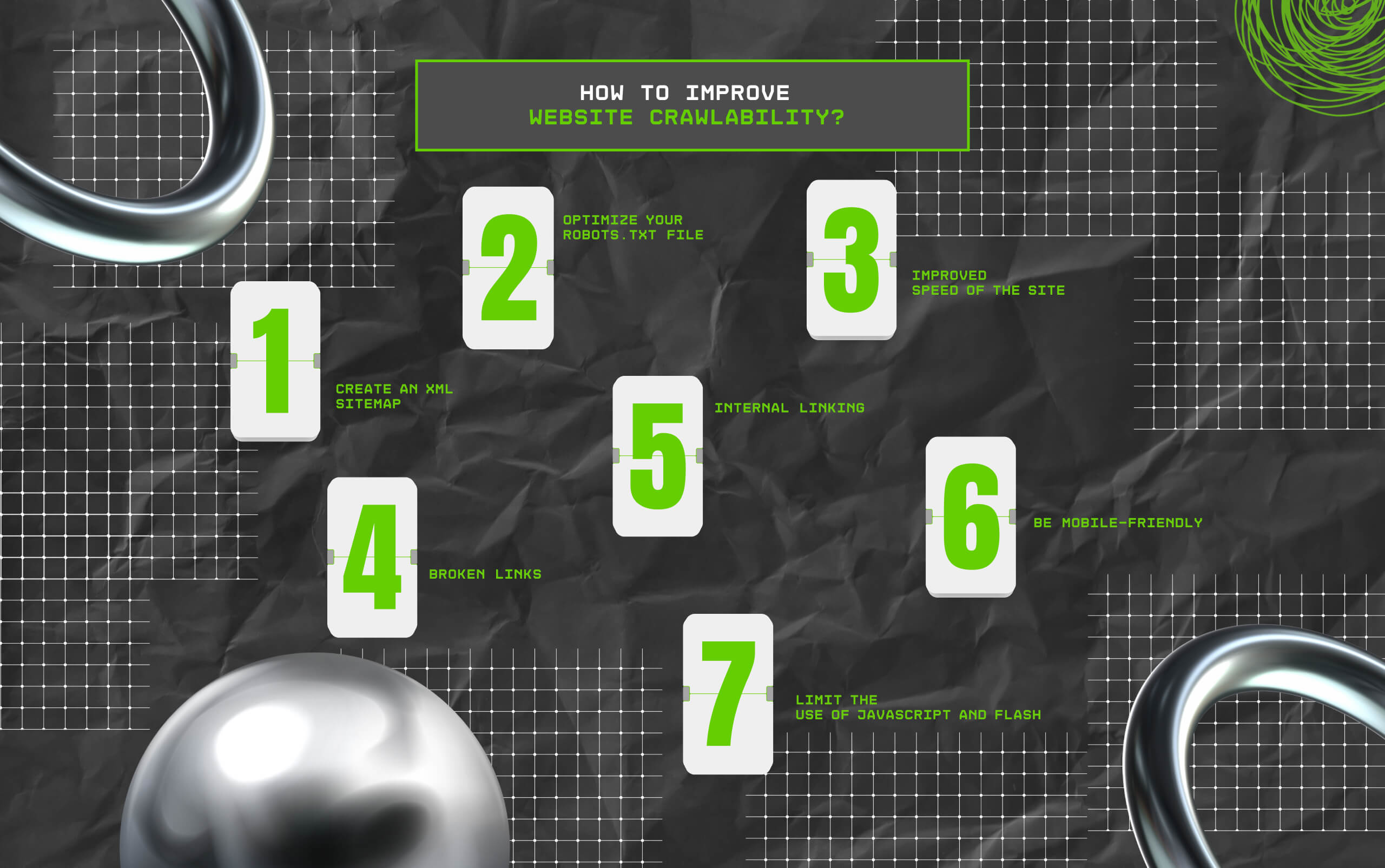

Here are a few optimization tips to make sure search engines can efficiently index your website to rank well:

Submit an XML sitemap to search engines like Google through Google Search Console. This makes it easy for crawlers to find your website’s important pages.

Ensure your robots.txt file is not blocking crawlers from important pages that need to be indexed.

Optimize images, minify JavaScript and CSS, and use caching methods. Making pages load faster is advantageous for both users and crawlers.

Try to identify broken links or 404 errors and then try to fix them. Crawlers can easily follow the links on your website.

Use a well-planned internal linking structure so the crawlers can easily follow through your site and index your pages accordingly.

As search engines do mobile-first indexing, ensure your website is mobile-friendly so the crawlers can easily access and rank your content correctly.

Crawlers can now read most scripts of JavaScript scripts, though some older crawlers have not mastered it. Avoid strong reliance on JavaScript or Flash when such content is essential, and navigation is needed.

There are many tools available to track how well search engines are crawling your site and to make changes.

This single free tool by Google would let you know how Googlebot crawls your site and what problems as a problem, such as sitemap submission.

Ahrefs has a technical SEO audit tool from where you can view which crawlability issue has more than it would hinder the indexing of your webpage.

It is a website crawler showing how search engines crawl your website in terms of broken link redirects and even metadata analysis.

SEMrush has crawl errors, duplicate content, along many other issues that prevent or affect your website.

It’s an entire website crawler that gives you great insight into crawlability and areas that need improvement.

Website Crawling is the basic process of allowing Search Engines to index content for users to find later.

The more accessible your website is to web crawlers, the higher the chance of ranking well in SERP’s. Structure, speed, and accessibility are the main factors determining how efficiently crawlers index your pages.

Optimizing your website for crawling includes using XML sitemaps, fixing broken links, and improving overall site performance.

Checking crawling performance at intervals using tools like Google Search Console and Ahrefs ensures your website is accessible to search engines and ready to perform at search rankings.

Here are some of the basic questions that most digital marketers and brands look for around the concept of website crawling.

Although it is not highlighted, web crawlers can be programmed for diverse kinds of web content crawling.

Turning them into different types of web crawlers. So, some of these types of website crawlers include—

• Focused Crawler —

This type of website crawler scrolls through a specific type of content belonging to a niche domain, topic, or based on a particular parameter.

So, it offers results based on the probability of the content.

• Incremental Crawler —

Moreover, incremental crawlers revisit the sites and crawl the content to update the indexing.

So, they can replace the older indexed content with the new and updated content to reduce unnecessary documentation.

• Distributed Crawler —

Also, the distributed crawler basically works on two different websites at the same time.

That is, they crawl multiple websites simultaneously for faster indexing and comparison of results.

• Parallel Crawler —

Similarly, the parallel crawlers work on two or more sites at the same time, next to each other, to increase the indexing speed and downloading efficiency of website crawling.

Even though most search engines have incorporated AI models for improved searches.

Now, the AI crawling is not the same as the traditional search engine crawling. So, some of the core differences between these two types of crawling are—

• Purpose —

Firstly, traditional crawling focuses on discovering and indexing the content on the internet.

But AI crawling gathers the data from multiple web pages to generate responses for the search queries.

• Depth & Content Handling —

Moreover, traditional crawling scans the entire website based on keywords and internal linking structure.

But, on the other hand, AI-based crawling dives deep into the content to interpret the data to understand the context, intent, and sentiment of the text.

• Storage & Outputs —

Finally, the traditional crawling stores the discovered content in the various searchable databases or repositories.

However, AI crawling transforms the content into embedded datasets for training the models for accuracy in the results.

Even though website crawling provides countless benefits, it comes with its own set of challenges and limitations. Some of these include—

• Regularly updating the content to keep the topic relevant for the website crawlers to index.

• Using crawler traps to stop the website crawlers from discovering or indexing the pages.

• Storing and documenting duplicate content might affect the storage quality and quantity.

• Overloading and indexing the pages can impact the bandwidth of the internet.

Chandrima is a seasoned digital marketing professional who works with multiple brands and agencies to create compelling web content for boosting digital presence. With 3 years of experience in SEO, content marketing, and ROI-driven content, she brings effective strategies to life. Outside blogging, you can find her scrolling Instagram, obsessing over Google's algorithm changes, and keeping up with current content trends.

View all Posts

Facebook Premiere: Why Digital Marketers Need...

Jan 22, 2026

What Is Popularity Link? Boosting SEO With Qu...

Jan 21, 2026

SEO Migration: What Is It & Why Is Websit...

Jan 21, 2026

Google Doodle Garden Gnomes: Is This Mini Gam...

Jan 20, 2026

Shopify Product Title SEO: How To Improve S...

Jan 20, 2026